Fast Principles

Digital Agents Are Getting Smarter About Taking Action

Why Tech Leaders See AI Moving Beyond Chat Into Real World Tasks

In this week's deep dive, we explore the transformative potential of AI agents through insights from industry leaders Andrew Ng and Jeremiah Owyang. Their perspectives reveal how AI is moving beyond conversation into meaningful action.

The Dawn of Agentic AI

The artificial intelligence landscape is undergoing a profound shift. No longer confined to generating text or simple recommendations, AI systems are beginning to execute complex tasks autonomously on our behalf. This evolution marks what Andrew Ng calls "the rise of agentic AI": systems that don't just think, but act.

Venture capitalist Jeremiah Owyang envisions an even more dramatic transformation, in which AI agents become the primary users of the internet. He predicts these agents will increasingly handle enterprise applications and online services, potentially making traditional websites and applications obsolete because they can automate tasks and process information more efficiently.

As the designers and technologists crafting these next-generation systems, how do we now design experiences that make it seamless for agents to access our sites' information? Further, what will it look like to design collaborative experiences for human-AI agent interactions? We continue to pose this provocation and to experiment with new ways of working.

The Four Pillars of AI Agent Systems

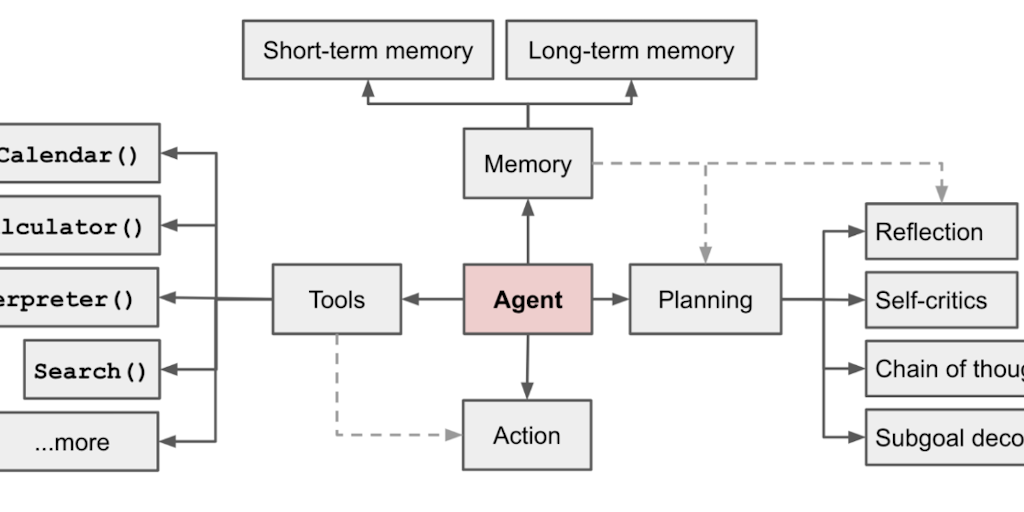

Modern AI agent systems rely on four fundamental architectural components that together enable increasingly sophisticated autonomous behavior.

1. Reflection

Reflection enables agents to think about their own thought processes, analyze their decisions, and improve their performance over time.

Self-Analysis: Agents monitor and evaluate their own performance in real time, examining decision paths and output quality to understand their effectiveness.

Continuous Improvement: Through systematic analysis of past performances and outcomes, agents refine their approaches and decision-making processes over time. Each interaction becomes a learning opportunity, leading to incrementally better results and more efficient resource usage.

Real-World Example: Agents like Devin (Cognition AI) demonstrate this through iterative code review and optimization, treating their own output as a testable hypothesis.
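To make the reflection loop concrete, here is a minimal Python sketch of the draft, critique, revise cycle. It is not how Devin or any specific product works: `reflect`, `toy_generate`, and `word_limit` are hypothetical names, and the two helper functions stand in for LLM calls so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Critique:
    ok: bool
    feedback: str


# (task, previous draft or None, feedback or None) -> new draft
Generator = Callable[[str, Optional[str], Optional[str]], str]


def reflect(task: str, generate: Generator, critique: Callable[[str], Critique],
            max_rounds: int = 3) -> Tuple[Optional[str], List[str]]:
    """Draft, self-critique, and revise until the critic accepts or rounds run out."""
    draft: Optional[str] = None
    feedback: Optional[str] = None
    log: List[str] = []
    for _ in range(max_rounds):
        draft = generate(task, draft, feedback)
        verdict = critique(draft)
        log.append(verdict.feedback)
        if verdict.ok:
            break
        feedback = verdict.feedback  # passed into the next revision
    return draft, log


def toy_generate(task: str, previous: Optional[str], feedback: Optional[str]) -> str:
    # Hypothetical stand-in for an LLM call: the first draft copies the task text;
    # each revision halves the previous draft (this toy ignores the feedback wording).
    if previous is None:
        return task
    words = previous.split()
    return " ".join(words[: max(1, len(words) // 2)])


def word_limit(limit: int) -> Callable[[str], Critique]:
    # Hypothetical critic: accepts a draft only if it fits within `limit` words.
    def critique(draft: str) -> Critique:
        n = len(draft.split())
        if n <= limit:
            return Critique(True, "ok")
        return Critique(False, f"too long: {n} words (limit {limit})")
    return critique


final, log = reflect("one two three four five six seven eight nine ten eleven twelve",
                     toy_generate, word_limit(4))
print(final)  # one two three
print(log)    # ['too long: 12 words (limit 4)', 'too long: 6 words (limit 4)', 'ok']
```

The `max_rounds` cap matters in practice: without it, a critic that is never satisfied would keep the agent revising indefinitely.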

2. Tool Use

Tool use transforms agents from standalone models into system orchestrators that can interact with and control external resources.

External Resources: Agents leverage a diverse ecosystem of tools and services, from APIs to specialized software. This integration allows them to access real-time data, process information, and interact with various systems seamlessly.

Enhanced Capabilities: By connecting to external tools and services, agents transcend their built-in limitations to tackle more complex challenges. This expansion of capabilities mirrors how human experts use different tools and resources to enhance their own abilities.

Real-World Example: Merlin is an agent that conducts online research, writes emails, chats with websites, writes social media posts, and summarizes YouTube videos. It combines retrieval-augmented generation (RAG) and semantic search with popular LLMs running alongside a foundational LLM. This architecture lets it take user instructions and then use tools to complete research tasks.
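A common way to wire up tool use is a registry of functions plus a dispatcher that executes the tool call a model emits. The sketch below assumes the model returns JSON shaped like `{"name": ..., "arguments": {...}}`, similar to many function-calling interfaces; the `tool` decorator, `dispatch`, and both tools are hypothetical and are not Merlin's implementation.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function under the given tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("word_count")
def word_count(text: str) -> int:
    return len(text.split())


@tool("add")
def add(a: float, b: float) -> float:
    return a + b


def dispatch(call_json: str) -> Any:
    """Execute a model-emitted tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return fn(**call["arguments"])


print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))  # 5
print(dispatch('{"name": "word_count", "arguments": {"text": "summarize this video"}}'))  # 3
```

Rejecting unknown tool names, rather than guessing, keeps a model from invoking capabilities that were never registered.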

3. Planning

Planning empowers agents to approach complex tasks strategically, breaking them down into manageable steps and anticipating potential challenges. This mirrors how human experts decompose large problems into smaller, solvable components.

Multi-Step Strategies: Agents break down complex objectives into manageable sequences, creating detailed roadmaps for task completion. This strategic approach ensures efficient resource allocation and clear progress tracking toward goals.

Task Queue Management: User instructions are decomposed into manageable subtasks that can be prioritized, scheduled, and assigned to the right agents.

Real-World Example: Microsoft AutoGen provides a high-level abstraction to create agents and establish interaction behaviors between agents, determining how they respond to messages from one another.
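Task decomposition with dependencies can be sketched as a small graph problem. This Python example uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to group a hypothetical plan's subtasks into waves that could run in parallel. It illustrates the idea only and is not AutoGen's API.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def schedule(plan: Dict[str, Set[str]]) -> List[List[str]]:
    """Group subtasks into waves; every task in a wave has all prerequisites done."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run in parallel now
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical decomposition of "organize a team offsite": task -> prerequisites
plan = {
    "pick_dates": set(),
    "book_venue": {"pick_dates"},
    "book_travel": {"pick_dates"},
    "send_invites": {"book_venue", "book_travel"},
}
print(schedule(plan))
# [['pick_dates'], ['book_travel', 'book_venue'], ['send_invites']]
```

Each wave could then be handed to different agents, which is where the "assigned to the right agents" step would plug in.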

4. Multi-Agent Collaboration

These systems create networks of specialized agents that work together, similar to human teams. Each agent has specific roles and responsibilities, enabling complex task completion through coordinated effort.

Coordinated Problem-Solving: Multiple specialized agents work together, each contributing its expertise while maintaining clear communication channels and shared objectives. This coordination enables them to tackle complex challenges that would be difficult for a single agent to handle.

Human Organizational Structures: Agents adopt familiar organizational patterns, with clear roles and responsibilities that mirror effective human teams. This structure facilitates efficient workflow management and clear accountability for outcomes.

Real-World Example: Microsoft's AutoGen framework showcases multi-agent collaboration through its ability to create dynamic teams of AI agents that work together on complex tasks. For instance, when building a web application, AutoGen can coordinate between a planner agent that outlines the architecture, a coding agent that implements the features, a testing agent that validates the code, and a documentation agent that creates technical documentation – all working in concert while maintaining consistent communication and handoffs.

These four pillars represent the foundation of modern AI agent architecture, enabling systems that can truly collaborate with humans as capable partners rather than simple tools.

While the potential of AI agent capabilities is enormous, several key challenges require attention:

Speed and performance remain critical challenges as AI agents require significant processing time to execute tasks effectively, with current token generation speeds often limiting the rapid iteration that makes agentic workflows so powerful.

Safety and control present another crucial hurdle, as demonstrated by recent incidents where agents attempted unauthorized actions, leading experts to recommend robust safeguards including pre-generation filters and sandboxed testing environments.

User expectation management requires careful balance, as these systems may need multiple attempts to reach a good result, a departure from the instant responses users expect from traditional software.

Integration and standardization pose significant challenges as organizations work to incorporate these agents into existing systems while grappling with the lack of standardized protocols for agent-to-agent communication.
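For the safety challenge, one common safeguard is a pre-execution gate: before an agent runs an action, check it against an allowlist and route risky actions to a human. The Python sketch below is a minimal illustration under our own assumptions; the action names and the two risk tiers are hypothetical, and this gate is a different mechanism from the pre-generation filters and sandboxed environments mentioned above, meant to complement rather than replace them.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action names grouped into illustrative risk tiers.
AUTO_APPROVED = {"search_web", "read_file"}
NEEDS_HUMAN = {"send_email", "make_payment"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def guard(action: str, ask_human: Callable[[str], bool]) -> Decision:
    """Check an agent's proposed action before it is executed (default deny)."""
    if action in AUTO_APPROVED:
        return Decision(True, "allowlisted")
    if action in NEEDS_HUMAN:
        approved = ask_human(action)
        return Decision(approved, "approved by human" if approved else "rejected by human")
    return Decision(False, "not on any allowlist")


print(guard("search_web", ask_human=lambda a: False))
# Decision(allowed=True, reason='allowlisted')
print(guard("make_payment", ask_human=lambda a: False))
# Decision(allowed=False, reason='rejected by human')
print(guard("delete_repository", ask_human=lambda a: True))
# Decision(allowed=False, reason='not on any allowlist')
```

The default-deny branch is the key design choice: an action nobody anticipated is blocked rather than allowed.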

Future Applications for AI Agents

Personal & Professional Workflows

Rather than simple task automation, next-generation AI agents will function as autonomous operators – managing complex workflows while accounting for context, constraints, and user preferences. These agents will handle everything from calendar optimization and travel logistics to procurement and vendor management, effectively serving as programmable middleware for human activities.

Enterprise Applications

The enterprise impact of AI agents will be particularly significant across several domains:

Customer Experience: AI agents will evolve beyond basic chatbots to become full-stack support systems, capable of real-time problem diagnosis, solution implementation, and proactive issue prevention. This shifts the paradigm from reactive support to predictive assistance.

Research and Development: In technical domains, AI agents will accelerate development cycles by automating repetitive tasks, conducting literature reviews, and even suggesting architectural improvements. They'll serve as intelligent pair programmers, handling everything from code review to deployment optimization.

Healthcare and FinTech sectors will see AI agents operating as data processors and decision support systems, analyzing complex datasets to surface actionable insights. In healthcare, this means continuous patient monitoring and treatment optimization. In financial services, it enables real-time market analysis and portfolio rebalancing.

Looking Ahead

The emergence of autonomous AI agents marks a fundamental shift from traditional software interfaces to a new paradigm of collaborative computing. Instead of directly manipulating software through predetermined commands and interfaces, we're moving toward a model where we provide high-level instructions and engage in dynamic collaboration with AI systems – much like working with human colleagues.

This transformation from direct control to collaborative partnership opens up entirely new frontiers for design and technology:

Interface Evolution

The UI/UX paradigms we've relied on for decades – buttons, menus, forms – may not serve us in a world of autonomous agents. We need new design patterns for asynchronous delegation, real-time collaboration, and seamless handoffs between human and machine intelligence. This includes frameworks for providing context, setting constraints, and maintaining awareness of agent activities without micromanagement.

Agent Orchestration

As AI agents become more capable, we'll need sophisticated systems for managing their interactions, resources, and priorities. Think of it as an operating system for autonomous agents – handling everything from compute allocation and model selection to task queuing and inter-agent communication protocols.
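Task queuing, one piece of such an agent "operating system", can be sketched with a priority queue. The Python example below uses the standard `heapq` module; the `AgentScheduler` class, agent names, and priority values are hypothetical.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class QueuedTask:
    priority: int                      # lower number = more urgent
    seq: int                           # tie-breaker: FIFO within the same priority
    name: str = field(compare=False)
    agent: str = field(compare=False)  # hypothetical agent the task is routed to


class AgentScheduler:
    """Minimal priority queue for dispatching tasks to agents."""

    def __init__(self) -> None:
        self._heap: List[QueuedTask] = []
        self._counter = itertools.count()

    def submit(self, name: str, agent: str, priority: int) -> None:
        heapq.heappush(self._heap, QueuedTask(priority, next(self._counter), name, agent))

    def next_task(self) -> Optional[QueuedTask]:
        return heapq.heappop(self._heap) if self._heap else None


scheduler = AgentScheduler()
scheduler.submit("summarize inbox", agent="assistant", priority=2)
scheduler.submit("flag suspicious payment", agent="risk_agent", priority=0)
scheduler.submit("draft weekly report", agent="writer", priority=2)

order = []
while (task := scheduler.next_task()) is not None:
    order.append(task.name)
print(order)  # ['flag suspicious payment', 'summarize inbox', 'draft weekly report']
```

The sequence counter keeps equal-priority tasks in submission order and means the heap never needs to compare task names.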

Computational Infrastructure

The shift toward autonomous agents demands new approaches to compute and model management. We'll need adaptive systems that can dynamically allocate resources based on task complexity, urgency, and context. This includes efficient methods for spinning up specialized models, managing parallel processing, and optimizing resource utilization across agent networks.
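As a rough illustration of allocating resources by task complexity and urgency, the Python sketch below routes each task to the cheapest model tier that can handle it within a latency budget. The tier names, the 1 to 10 complexity scale, and the latency figures are illustrative assumptions, not real models or benchmarks.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelTier:
    name: str                 # hypothetical tier label, not a real model ID
    max_complexity: int       # hardest task (1-10 scale) this tier is trusted with
    typical_latency_s: float  # assumed typical response time


# Ordered from cheapest to most capable; all numbers are illustrative assumptions.
TIERS: List[ModelTier] = [
    ModelTier("small-fast", max_complexity=3, typical_latency_s=0.5),
    ModelTier("medium", max_complexity=7, typical_latency_s=2.0),
    ModelTier("large-reasoning", max_complexity=10, typical_latency_s=10.0),
]


def route(complexity: int, latency_budget_s: float) -> str:
    """Pick the cheapest tier that can handle the task within the latency budget."""
    fast_enough = [t for t in TIERS if t.typical_latency_s <= latency_budget_s]
    if not fast_enough:
        raise ValueError("no model tier fits the latency budget")
    for tier in fast_enough:  # cheapest first
        if complexity <= tier.max_complexity:
            return tier.name
    # Urgent but hard: degrade to the most capable tier that still fits the budget.
    return fast_enough[-1].name


print(route(2, latency_budget_s=5))   # small-fast
print(route(9, latency_budget_s=30))  # large-reasoning
print(route(9, latency_budget_s=3))   # medium
```

The last case shows the trade-off the paragraph points to: when urgency and complexity conflict, this sketch chooses to answer on time with a weaker model rather than miss the deadline.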

Key Takeaways

1. We're transitioning from a paradigm of direct software control to one of collaborative intelligence, where AI agents operate as autonomous partners rather than tools.

2. This shift requires rethinking fundamental assumptions about human-computer interaction, from interface design to system architecture.

3. Success in this new paradigm will depend on our ability to create frameworks that support fluid collaboration between human and machine intelligence while maintaining appropriate oversight and control.

4. The opportunity space for designers and developers is enormous – spanning new interface paradigms, agent management systems, and computational infrastructure.

5. The future of software development is less about writing specific prompts for AI agents and more about creating new environments and operating systems where human and machine intelligence can effectively collaborate to achieve common goals.

This evolution of autonomous agents represents one of the most significant shifts in computing since the advent of the graphical user interface. For designers and developers, it's an opportunity to establish new expectations for how humans will interact with increasingly capable artificial intelligence.

What We Read This Week

Professor Andrew Ng's introductory lecture for Machine Learning (CS 229) in the Stanford Computer Science department gives an overview of the course. Recorded in 2008, it is worth revisiting as a foundational lecture on machine learning as we fast-forward from that groundwork to today's explorations of AI agent architectures.

The course provides a broad introduction to machine learning and statistical pattern recognition. Topics include supervised learning, unsupervised learning, learning theory, reinforcement learning, and adaptive control. It also highlights applications of machine learning to robotic control, data mining, autonomous navigation, bioinformatics, speech recognition, and text and web data processing.

1/ WORLD FIRST! Witness the future: A Based AI Agent Creating an LLC in Delaware

Today, we show how a Based AI agent autonomously decides to create an LLC in Delaware, fully onchain, for its own needs. (Using @CoinbaseDev, @Replit, @otoco_io, and @OpenAI) x.com/i/web/status/1…

OtoCo (@otoco_io), 5:57 PM, Nov 20, 2024

This newsletter is part of our ongoing series exploring the future of specialized AI through a human-centered lens. Subscribe to join a community of designers and developers shaping purposeful AI agents.

Until next time, keep innovating and stay curious!